Zero-failure replication service


Requires EVA ICS Enterprise.

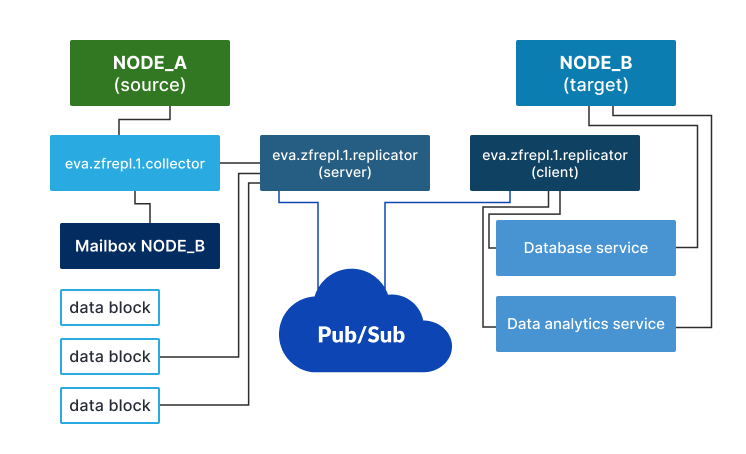

The zero-failure replication service solves a typical IoT problem: real-time data is lost when the pub/sub target is offline or when a source temporarily has no connection to pub/sub.

The service provides a second replication layer, in addition to the Replication service, which guarantees that all telemetry data is transferred to the target node, unless deleted as expired.

The service helps fill gaps in logs, charts or any other kind of archive data representation, collection or analysis.

The service can work in 3 roles (only one can be defined in the deployment config):

Service roles

Collector

Collects real-time data for local items and stores it in blocks of the subscribed mailboxes. Each mailbox must have the same name as the remote node that collects its data.

The mailbox blocks have compact and crash-free format with serialize+CRC32 scheme, which allows processing all available frames in the block unless a broken one is detected.
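The idea of processing every frame up to the first broken one can be illustrated with a minimal sketch. The frame layout below (length, CRC32, payload) is a hypothetical one chosen for illustration and is not the actual zfrepl block format, which is not described here.

```python
import struct
import zlib

# Hypothetical frame layout (NOT the real zfrepl format):
# 4-byte big-endian payload length, 4-byte CRC32 of the payload, payload bytes.
HEADER = struct.Struct(">II")


def write_frame(payload: bytes) -> bytes:
    """Serialize one frame: header (length + CRC32) followed by the payload."""
    return HEADER.pack(len(payload), zlib.crc32(payload)) + payload


def read_frames(block: bytes) -> list[bytes]:
    """Return all valid frames, stopping at the first truncated or corrupted one."""
    frames = []
    pos = 0
    while pos + HEADER.size <= len(block):
        length, crc = HEADER.unpack_from(block, pos)
        start = pos + HEADER.size
        payload = block[start:start + length]
        if len(payload) < length or zlib.crc32(payload) != crc:
            break  # broken frame: keep what was read so far
        frames.append(payload)
        pos = start + length
    return frames


payloads = [b"t=1 v=20.5", b"t=2 v=20.7", b"t=3 v=20.6"]
block = b"".join(write_frame(p) for p in payloads)
# Simulate a crash in the middle of writing the last frame
damaged = block[:-4]
recovered = read_frames(damaged)
```

With such a scheme, a crash while writing costs at most the frame being written; everything stored before it remains readable.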

Telemetry data is known to be compressed well so it is highly recommended to compress blocks when transferred (the service client applies BZIP2-compression automatically).
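To get a feeling for how well repetitive telemetry compresses, the following sketch applies BZIP2 from the Python standard library to synthetic data. The event structure, the sensor OID and the JSON encoding are assumptions made for the example; real blocks use the service's own serialization, so the actual ratio will differ.

```python
import bz2
import json

# Synthetic telemetry: 5000 state events for one hypothetical sensor
events = [
    {"oid": "sensor:plant/temp1", "t": 1656445625 + i, "status": 1,
     "value": 20.0 + (i % 10) / 10}
    for i in range(5000)
]
raw = "\n".join(json.dumps(e) for e in events).encode()

compressed = bz2.compress(raw)
ratio = len(raw) / len(compressed)
print(f"raw: {len(raw)} bytes, compressed: {len(compressed)} bytes, "
      f"ratio: {ratio:.1f}x")

# Decompression restores the data byte for byte
restored = bz2.decompress(compressed)
```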

Additionally, if replication blocks are lost but there is a history database service on a local node (e.g. InfluxDB state history or SQL databases state history), the collector may be asked to fill a mailbox with blocks from the database (see mailbox.fill).

The service performing the collector role is always online.

Replicator

Allows setting up mailbox replication based on a flexible custom schedule (e.g. every minute, at night only etc.).

Automatically collects replication blocks from remote nodes and pushes them to the local bus replication archive topic (ST/RAR/<OID>).

Requires a Pub/Sub server (PSRT or MQTT). Both the source and the target node must share the same API key. The API key is used only to check access to the particular mailbox mapped in the service configuration and can have an empty ACL. Although usually deployed together with the Replication service, the replicator uses a dedicated connection (or a dedicated server).

Transfers blocks compressed and encrypted.

Warning

The replicator role MUST be deployed on the same machine as the collector.

The replicator client may fetch both prepared-to-replicate blocks and the current collector block. In the latter case, the block is forcibly rotated. This means that if the mailbox replication schedule is set as continuous, the replication frequency is nearly equal to the configured block requests interval.

The service performing the replicator role is automatically restarted on pub/sub failures.

Standalone

Allows importing manually copied blocks only (see process_dir).

To process the block directory manually, use:

eva svc call eva.zfrepl.1.replicator \
    process_dir path=/path/to/blocks node=SOURCE_NAME delete=true

# or using the bus CLI client

/opt/eva4/sbin/bus /opt/eva4/var/bus.ipc rpc call eva.zfrepl.1.replicator \
    process_dir path=/path/to/blocks node=SOURCE_NAME delete=true

The service performing the standalone role is always online.

Recommendations

Large blocks may flood database services on target nodes with data. Make sure these services have enough resources and a sufficient bus queue size.

Keep data blocks small (2-3MB). Approximately, telemetry data is compressed 10x but the ratio may vary depending on setup.

If a large number of blocks is generated, increase the block_ttl_sec field of the collector mailbox.
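The relation between event rate, block size and block TTL can be estimated with simple arithmetic. The sketch below assumes that a block is closed either when it reaches max_block_size or when block_ttl_sec expires, whichever happens first, and uses the approximate 10x compression ratio mentioned above. The event rate and the average event size are hypothetical inputs.

```python
def block_estimate(events_per_sec: float, avg_event_bytes: int,
                   max_block_size: int = 2_000_000, block_ttl_sec: int = 600,
                   compression_ratio: float = 10.0) -> dict:
    """Estimate how blocks rotate and how much data is transferred per day."""
    bytes_per_sec = events_per_sec * avg_event_bytes
    if bytes_per_sec <= 0:
        raise ValueError("event rate and event size must be positive")
    # Assumption: a block is closed when it is full or when its TTL expires
    secs_to_fill = max_block_size / bytes_per_sec
    block_lifetime = min(secs_to_fill, block_ttl_sec)
    return {
        "block_lifetime_sec": block_lifetime,
        "block_size_bytes": bytes_per_sec * block_lifetime,
        "blocks_per_day": 86400 / block_lifetime,
        "transfer_bytes_per_day": bytes_per_sec * 86400 / compression_ratio,
    }


# 100 events per second, 50 bytes each (hypothetical load)
est = block_estimate(events_per_sec=100, avg_event_bytes=50)
print(est)
```

In this example the size limit is reached before the TTL expires, so a longer block_ttl_sec would not reduce the number of blocks; at lower event rates the TTL becomes the limiting factor.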

mailbox.fill may cause significant disk/event queue overhead. Make sure the collector service has:

enough bus queue

enough file ops queue

If a heavy network load is expected because of large amounts of real-time data (e.g. equipment connected to the node is being reconfigured), a service running in the replicator role may be temporarily disabled:

eva svc call eva.zfrepl.1.replicator disable

# or using the bus CLI client

/opt/eva4/sbin/bus /opt/eva4/var/bus.ipc rpc call eva.zfrepl.1.replicator disable

When disabled, the service stops all local replication client tasks (which must be later triggered either by schedulers or manually) and forbids serving blocks via pub/sub for external clients. Other methods and tasks are not affected.

To re-enable the service, repeat the above command with the “enable” method or restart the service.

Untrusted nodes and zero-failure replication

The approach is similar to real-time replication: by default remote zero-failure replication mailboxes are trusted, which means all remotes can provide telemetry data for all items.

To set up zero-failure replication with an untrusted node, mark its mailbox with “trusted: false” in the replicator/client section of the service configuration and make sure the configured API key has an ACL with “write” permission for the allowed items.

Strategies

Zero-failure replication offers the following strategies. The most suitable one should be selected based on data importance, data volume, communication speed and other customer requirements.

Continuous

The default (most reliable) strategy, when zero-failure replication acts as a second replication layer. Recommended for mission-critical setups when high-speed communication between nodes is available.

As all data is always replicated, self_repair in clients is not required.

Manual

The collector mailbox option auto_enabled must be set to false.

Used when problems occur: a system administrator runs the “mailbox.fill” method manually on secondary nodes to fill data gaps:

eva svc call eva.zfrepl.default.collector mailbox.fill i=NAME t_start=TIMESTAMP t_end=TIMESTAMP
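When the gap boundaries are known as calendar times, the command line can be assembled with a small helper. This is only a convenience sketch: the function name fill_command is hypothetical, and it assumes that t_start and t_end are passed as Unix timestamps in seconds.

```python
import shlex
from datetime import datetime, timezone


def fill_command(mailbox: str, start: datetime, end: datetime,
                 svc: str = "eva.zfrepl.default.collector") -> str:
    """Build the eva-shell command line for mailbox.fill over [start, end]."""
    if end <= start:
        raise ValueError("end must be later than start")
    args = [
        "eva", "svc", "call", svc, "mailbox.fill",
        f"i={mailbox}",
        # Assumption: timestamps are given as Unix time in seconds
        f"t_start={int(start.timestamp())}",
        f"t_end={int(end.timestamp())}",
    ]
    return shlex.join(args)


cmd = fill_command(
    "node1",
    datetime(2022, 6, 28, 19, 0, tzinfo=timezone.utc),
    datetime(2022, 6, 28, 20, 0, tzinfo=timezone.utc),
)
print(cmd)
```

Using timezone-aware datetime objects avoids ambiguity when the administrator's workstation and the node run in different time zones.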

Source-forced

The collector mailbox option auto_enabled must be set to false.

A secondary node automatically enables/disables mailboxes, e.g. in case of network failure.

If communication issues are detected with a delay or the target node is unavailable, the strategy may be unreliable and require manual assistance.

E.g. the following shell command, which can be started using the system cron, disables/enables all collector mailboxes according to the network state:

# network is up: stop collecting; network is down: start collecting
if ping -c1 PUBSUB_HOST; then
    eva svc call eva.zfrepl.default.collector mailbox.disable "i=*"
else
    eva svc call eva.zfrepl.default.collector mailbox.enable "i=*"
fi

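As another example, the following Python lmacro disables/enables all mailboxes depending on whether the default real-time replication service is available:

try:
    # raises an exception if the real-time replication service is unavailable
    rpc_call('test', _target='eva.repl.default')
    # real-time replication works, mailbox collection is not needed
    rpc_call('mailbox.disable',
             i='*',
             _target='eva.zfrepl.default.collector')
except Exception:
    # real-time replication is down, start collecting into mailboxes
    rpc_call('mailbox.enable',
             i='*',
             _target='eva.zfrepl.default.collector')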

The scenario can be started on a specific schedule using the “jobs” feature of Logic manager.

Self-repairing

The collector mailbox option auto_enabled must be set to false.

Advanced configuration, which uses smart algorithms to replicate missing data only. Recommended for setups with slow/expensive communication between nodes.

mailboxes on the source (server) must have the allow_fill option set to true to allow the target to request data blocks

client configurations must have the self_repair section configured

repair_interval in the self_repair section must be set if self-repairing is planned to be done automatically

the source must have a “heartbeat” - an item (e.g. a sensor) which is always updated within a specific interval, e.g. a PLC timestamp. If the connected equipment cannot provide one, a generator can be used:

eva generator source create heartbeat --target sensor:NODE/heartbeat time

it is recommended to set the skip_disconnected option of the target telemetry database service to true to prevent writing the state of the heartbeat item when real-time replication is unavailable.

Limitations:

self-repairing can find data gaps only inside the configured range. If a gap starts before the range, it is ignored. Consider increasing the range value.

to prevent data flooding when the client cannot download blocks between self-repairing checks (e.g. a scheduler is used), mailbox fill requests are sent to secondaries only once for each gap found. This may cause data loss, e.g. if the source node is restarted before the fill request is completed or in similar cases. In case of data loss, start self-repairing manually, with the force option if required.
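The role of the heartbeat item and of the range and gap options can be illustrated with a simplified gap search. This is a sketch of the general idea only, not the algorithm used by the service: the function find_gaps and its inputs are hypothetical, and the heartbeat history is assumed to be available as a plain list of timestamps.

```python
def find_gaps(heartbeats: list[float], now: float,
              range_sec: float = 86400,
              gap_sec: float = 5) -> list[tuple[float, float]]:
    """Return (start, end) pairs where consecutive heartbeat records are more
    than gap_sec apart, looking only at records inside the analysed range."""
    range_start = now - range_sec
    # Records older than the range are ignored, so a gap that starts
    # before the range cannot be detected (the first limitation above)
    inside = sorted(t for t in heartbeats if range_start <= t <= now)
    gaps = []
    for prev, cur in zip(inside, inside[1:]):
        if cur - prev > gap_sec:
            gaps.append((prev, cur))
    return gaps


now = 1000.0
# Heartbeat stored every second, with an outage between t=920 and t=950
history = [float(t) for t in range(900, 921)] + [float(t) for t in range(950, 1001)]

gaps = find_gaps(history, now, range_sec=100, gap_sec=5)
# With a shorter range the outage starts before the analysed window
gaps_short_range = find_gaps(history, now, range_sec=60, gap_sec=5)
```

In this simplified model, each detected pair would correspond to one mailbox fill request with the matching start and end time.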

Starting self-repairing manually:

eva svc call eva.zfrepl.default.replicator client.self_repair i=NODE

# forcibly fill all gaps, ignore tasks cache

eva svc call eva.zfrepl.default.replicator client.self_repair i=NODE force=true

Setup

Use the template EVA_DIR/share/svc-tpl/svc-tpl-zfrepl.yml:

# EVA ICS zero-failure replication service

command: svc/eva-zfrepl

workers: 1

bus:

path: var/bus.ipc

config:

# the service can work in three roles:

#

# collector - collects data from the local node bus events to mailboxes,

# always online. Must have the "collector" section

#

# standalone - allows only to import manually copied blocks from a local dir

#

# replicator - serves and collects the data from the mailboxes via pub/sub,

# MUST be deployed on the same machine as the collector. Must have the

# "replicator" section

collector:

# mailboxes location, relative to EVA_DIR or absolute. if running under a

# restricted user account (default: eva), the directory MUST be created

# manually and the effective account must have read/write/execute (list)

# permissions to it

path: runtime/zfrepl/spool

# default database service ID for mailbox.fill without eva.db. prefix, e.g.

# "db1" for eva.db.db1

#default_history_db_svc: default

mailboxes:

node1:

# set false to disable auto collection

#auto_enabled: false

# max data block size (uncompressed)

max_block_size: 2_000_000

# block time-to-live (sec) before creating a new block

block_ttl_sec: 600

# keep unrequested blocks for (sec)

keep: 86400

# file ops max queue size, if full, incoming events are dropped

queue_size: 512

auto_flush: false

# periodic collection interval

interval: null

# do not submit remote disconnected items (useful for zfrepl or similar)

skip_disconnected: false

# ignore real-time events

ignore_events: false

# oids to watch

oids:

- "#"

# oids to exclude

oids_exclude: []

# DANGEROUS, enable for multi-level clusters only

#replicate_remote: true

#standalone: {}

#replicator:

#pubsub:

## mqtt or psrt

#proto: psrt

## path to CA certificate file. Enables SSL if set

#ca_certs: null

## single or multiple hosts

#host:

#- 127.0.0.1:2873

## if more than a single host is specified, shuffle the list before connecting

#cluster_hosts_randomize: false

## user name / password auth

#username: null

#password: null

#ping_interval: 10

## pub/sub queue size

#queue_size: 1024

## pub/sub QoS (not required for PSRT)

#qos: 1

## the local key service, required both to make and process API calls via PubSub

#key_svc: eva.aaa.localauth

#client:

## watch the services, if any is down, client operations are suspended

#watch_svcs:

#- eva.db.i1

#- eva.db.i2

#mailboxes:

## collect data from the mailbox at node_remote (mailbox name = local system name)

#node_remote:

## API key, required to open the mailbox

#key_id: default

## a cron-like schedule, when the client is triggered:

## second minute hour day month weekday year

##

## the year field can be omitted

## to run the task every N, use */N

#schedule: "* * * * * *"

## block requests interval (sec). it is recommended to set the interval

## lower than block ttl on the remote node collector

#interval: 30

## client session duration (sec). after the specified period of time the

## client stops, until triggered again manually or by the scheduler

#duration: 3600

#timeout: 60 # override the default timeout

#trusted: true

# self-repairing. requires:

# - a heartbeat sensor

# - allow_fill set to true on the target server instance

#self_repair:

# heartbeat sensor, required

# any sensor which is changed frequently enough

#oid: sensor:NODENAME/heartbeat

# auto-repair interval (sec), if not set, only manual repair is available

#repair_interval: 600

# state history database service to check data in

#history_db_svc: default

# range to analyze (in seconds, e.g. 86400 - previous day)

#range: 86400

# telemetry gap size (sec), if a gap is higher, self-repairing

# is activated

#gap: 5

#server:

## collector service

#collector_svc: eva.zfrepl.default.collector

#mailboxes:

## mailbox for the node_remote

#node_remote:

## API key, required to open the mailbox

#key_id: default

# Allow filling mailbox from remote (required for self-repairing)

#allow_fill: true

user: eva

Create the service using eva-shell:

eva svc create eva.zfrepl.N.collector|replicator /opt/eva4/share/svc-tpl/svc-tpl-zfrepl.yml

or using the bus CLI client:

cd /opt/eva4
cat DEPLOY.yml | ./bin/yml2mp | \
    ./sbin/bus ./var/bus.ipc rpc call eva.core svc.deploy -

(see eva.core::svc.deploy for more info)

EAPI methods

See EAPI commons for the common information about the bus, types, errors and RPC calls.

client.self_repair

| Description | [replicator] Manually launch self-repair |
| --- | --- |
| Parameters | required |
| Returns | nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | node name | yes |
| range | f64 | override repair range (sec) | no |
| force | bool | force all mailbox fill tasks, ignore cache | no |

client.start

| Description | [replicator] Trigger mailbox client startup |
| --- | --- |
| Parameters | required |
| Returns | nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | node name | yes |

disable

| Description | [replicator] Disable replication and kill all running tasks |
| --- | --- |
| Parameters | none |
| Returns | nothing |

enable

| Description | [replicator] Enable replication |
| --- | --- |
| Parameters | none |
| Returns | nothing |

mailbox.delete_block

| Description | [collector] Delete a block |
| --- | --- |
| Parameters | required |
| Returns | nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | Mailbox name | yes |
| block_id | String | block ID | yes |

mailbox.disable

| Description | [collector] Disable a mailbox |
| --- | --- |
| Parameters | required |
| Returns | nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | Mailbox name (* for all) | yes |

mailbox.enable

| Description | [collector] Enable a mailbox |
| --- | --- |
| Parameters | required |
| Returns | nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | Mailbox name (* for all) | yes |

mailbox.fill

| Description | [collector] Fill blocks from a local database service |
| --- | --- |
| Parameters | required |
| Returns | nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | Mailbox name | yes |
| db_svc | String | Database service name | no |
| t_start | Time | Starting time/timestamp (default: last 24 hours) | no |
| t_end | Time | Ending time/timestamp (default: now) | no |
| xopts | Map<String,Any> | extra options, passed to the database service as-is | no |

mailbox.get_block

| Description | [collector] Get a ready-to-replicate block |
| --- | --- |
| Parameters | required |
| Returns | Block or nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | Mailbox name | yes |

Return payload example:

{
  "block_id": "mbb_1656445625",
  "last": false,
  "path": "/opt/eva4/runtime/zfrepl/spool/rtest1/mbb_1656445625"
}

mailbox.list

| Description | [collector] List mailboxes |
| --- | --- |
| Parameters | none |
| Returns | List of mailboxes |

Return payload example:

[
  {
    "enabled": true,
    "name": "rtest1",
    "path": "/opt/eva4/runtime/zfrepl/spool/rtest1"
  },
  {
    "enabled": false,
    "name": "rtest2",
    "path": "/opt/eva4/runtime/zfrepl/spool/rtest2"
  }
]

mailbox.list_blocks

| Description | [collector] List ready-to-replicate blocks |
| --- | --- |
| Parameters | required |
| Returns | Block list |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | Mailbox name | yes |

Return payload example:

[
  {
    "block_id": "mbb_1656445625",
    "path": "/opt/eva4/runtime/zfrepl/spool/rtest1/mbb_1656445625",
    "size": 2983121
  },
  {
    "block_id": "mbb_1656445635",
    "path": "/opt/eva4/runtime/zfrepl/spool/rtest1/mbb_1656445635",
    "size": 2916
  }
]
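A payload like this can be used to monitor how much data is waiting for replication. The following sketch summarizes the block list from the example above. The summarize helper and the 3 MB threshold (taken from the upper bound of the recommended block size) are assumptions made for illustration; how the payload is obtained is left out.

```python
import json

# Payload copied from the example above
payload = json.loads("""
[
  {"block_id": "mbb_1656445625",
   "path": "/opt/eva4/runtime/zfrepl/spool/rtest1/mbb_1656445625",
   "size": 2983121},
  {"block_id": "mbb_1656445635",
   "path": "/opt/eva4/runtime/zfrepl/spool/rtest1/mbb_1656445635",
   "size": 2916}
]
""")

SIZE_LIMIT = 3_000_000  # hypothetical threshold, bytes


def summarize(blocks: list[dict], limit: int = SIZE_LIMIT) -> dict:
    """Count pending blocks, sum their sizes and list the oversized ones."""
    return {
        "count": len(blocks),
        "total_bytes": sum(b["size"] for b in blocks),
        "oversized": [b["block_id"] for b in blocks if b["size"] > limit],
    }


summary = summarize(payload)
print(summary)
```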

mailbox.rotate

| Description | [collector] Delete all blocks in the mailbox |
| --- | --- |
| Parameters | required |
| Returns | nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| i | String | Mailbox name | yes |

process_dir

| Description | [replicator/standalone] Process blocks from a local dir |
| --- | --- |
| Parameters | required |
| Returns | nothing |

| Name | Type | Description | Required |
| --- | --- | --- | --- |
| path | String | Local path | yes |
| node | String | Source node name (any if not important) | yes |
| delete | bool | Delete processed blocks (r/w permissions required) | no |

status

| Description | [replicator] Replication status |
| --- | --- |
| Parameters | none |
| Returns | Status payload |

Return payload example:

{
  "active_clients": ["node1"],
  "enabled": true
}